Roadmaps to Nowhere: Why Your AI Plan Isn't a Strategy

As we barrel into 2026, executives are scrambling to shore up their AI strategies. Most got by on storytelling, experimentation, and organic adoption last year. Boards and investors weren't expecting much, and management delivered.

The situation is different now.

Leaders must deliver results, and employees are tired of hearing about AI transforming their work only to find that little has changed. Sure, there are pockets of innovation (e.g., software engineering), but most people are stuck using janky chatbots, annoying AI scribes, and the modern incarnation of Clippy: Microsoft Copilot.

2026 is an inflection point.

Unfortunately, most AI strategies are doomed to fail. Not because of legacy systems, poor data quality, talent shortages, or employee resistance, but because leaders are obsessed with AI roadmaps.

Best Laid Plans

Earlier this month, I received a call from the CEO of a mid-market retailer seeking help with his AI strategy. It was a story I'd heard dozens of times. AI was disrupting his industry. His team had a few early success stories, but he wanted to go bigger in 2026. His CIO was drafting a request for proposal (RFP) that he'd send me soon.

I knew what the RFP would say before it hit my inbox. Stop me when this sounds familiar:

Identify AI use cases across the business.

Prioritize them based on impact and feasibility.

Construct a roadmap for the next 18–24 months.

I leafed through the RFP, deciding how to respond. I was up against large consulting firms like my former employer that would charge hundreds of thousands of dollars for the work. I was confident I could do the job for a fraction of the price.

In the end, I declined to respond. I understood why the CEO wanted an AI roadmap. His board and investors were demanding a concrete plan, and his CFO had empty cells in his budgeting spreadsheet. The RFP solved those problems, but it wouldn't put the company on a path to AI success.

Roadmaps aren't strategies; they're plans for getting from Point A to Point B. The challenge with AI is that nobody knows where Point B is.

Moving Target

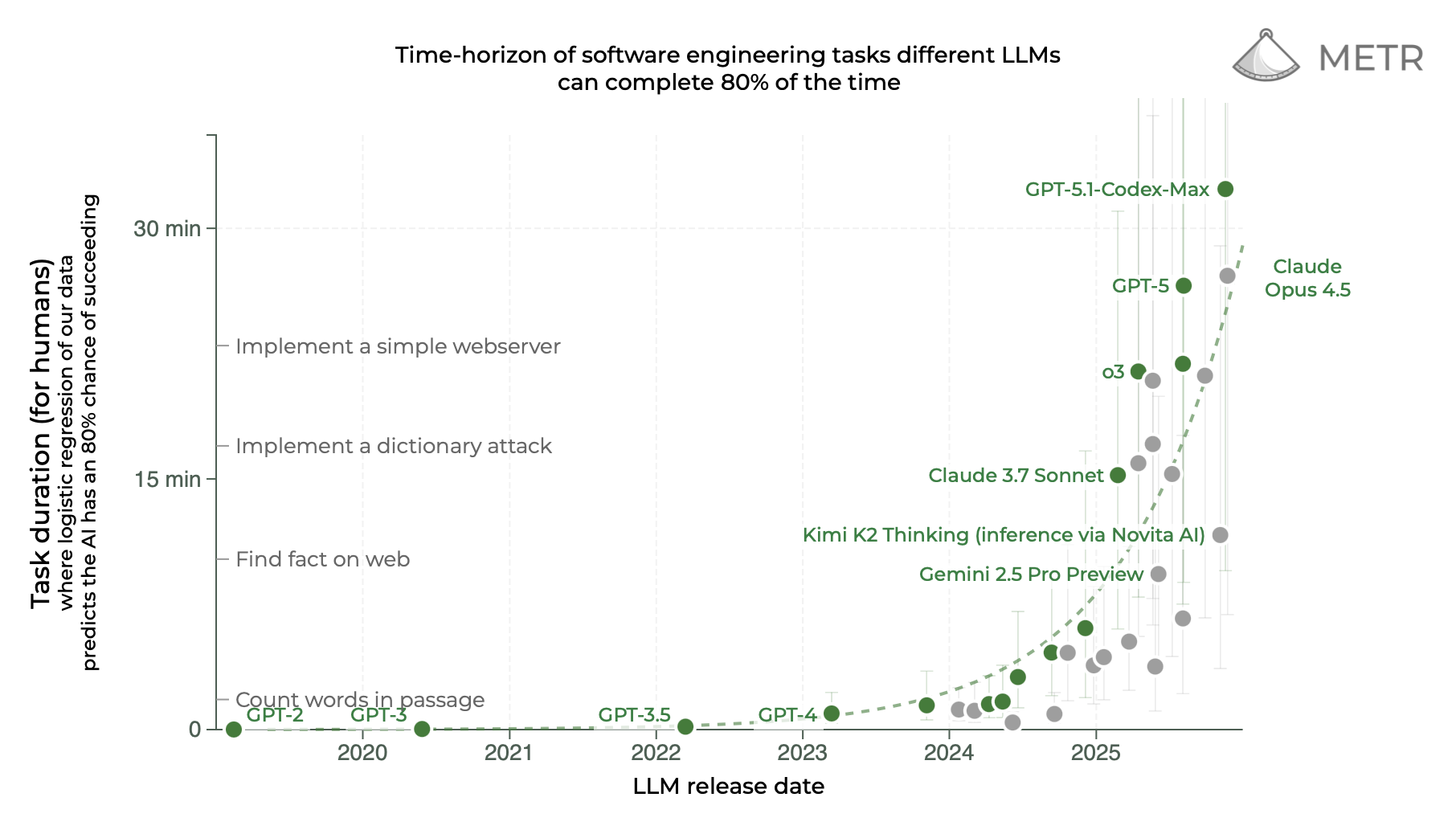

My favorite chart to show executives is the METR one that tracks AI's ability to complete long-duration software engineering tasks. Depending on which trendline you choose, the length of the tasks AI coding agents can complete is doubling about every four to seven months.

Source: METR
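To put those doubling times in perspective, here is a back-of-the-envelope sketch of how much ground the trendline covers over a typical roadmap horizon. It assumes steady exponential growth at the quoted doubling times, which is a simplification rather than anything METR promises, and the function name is purely illustrative.

```python
def growth_factor(months_elapsed: float, doubling_months: float) -> float:
    """Multiple by which achievable task length grows over a period,
    assuming it keeps doubling every `doubling_months` (an assumption)."""
    return 2 ** (months_elapsed / doubling_months)


# Compare the fast (4-month) and slow (7-month) trendlines
# over 12-month and 24-month planning horizons.
for doubling in (4, 7):
    for horizon in (12, 24):
        factor = growth_factor(horizon, doubling)
        print(f"Doubling every {doubling} months, after {horizon} months: {factor:.1f}x")
```

Under these assumptions, a 24-month roadmap spans roughly an 11x improvement on the slow trendline and a 64x improvement on the fast one. Either way, the capabilities at the end of the plan look very different from the ones it was written around.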

Imagine you constructed an AI roadmap at the end of 2024. At the time, AI coding agents could complete tasks equivalent to a few minutes of human work. Those were still the days of AI autocomplete, and CIOs were skeptical.

Fast forward a year, and coding agents (e.g., Claude Code, OpenAI Codex) can build complete applications from text prompts. It's not uncommon for an AI agent to work 30 minutes or more independently, pausing only to clarify requirements and gather feedback.

It's impossible to appreciate how much progress that represents if you don't use AI coding agents yourself. The trendline on the METR chart looks impressive, but most executives haven't internalized its meaning.

Here's how it felt for me.

I launched PurposeFund, an impact-investing platform that ranks every S&P 500 company across nine impact priorities, at the end of 2024. I built the site in a month with the help of AI coding agents. The result was fine, but getting there was rough.

Last month, I built AretaCare, an AI platform that helps caregivers stay organized. AretaCare is orders of magnitude more capable, scalable, and secure than PurposeFund. I had a working version in 48 hours, and I barely touched the AI's code.

The difference between PurposeFund and AretaCare isn't just speed and efficiency. PurposeFund is held together with hacks and hope. AretaCare, by contrast, is a real platform. It has a modern architecture, solid security, and proper error handling.

We're still on the steep part of the AI curve. The state of AI coding agents at the end of 2024 told you little about where they'd be a year later. If you think exponential progress is limited to coding agents, you're missing the point. Every AI capability is a moving target.

Predicting the future is a fool's errand. You can't roadmap your way to AI success, and doing so has real consequences:

Misallocated capital: Mindlessly investing in the wrong use cases, even after it's clear to everybody involved that there are higher and better opportunities (e.g., dumping money into internal chatbots that keep falling further and further behind ChatGPT and Claude).

Rigid ecosystems: Stubbornly sticking with platforms and vendors you know instead of experimenting with ones you don't (e.g., consulting firms that still insist on delivering PowerPoint pages).

Wasteful planning: Constantly churning timelines and requirements as AI capabilities evolve (e.g., scrambling to incorporate voice-based interfaces after multi-modal AI reaches a tipping point).

Frustrated employees: Sitting around waiting for IT teams to deliver something useful, all the while worrying about being fired for using personal accounts at work (e.g., vibe-coding with Lovable).

Roadmaps assume the world is predictable and controllable. It's not, and believing so is a recipe for misallocating capital, favoring incumbents, churning tech teams, and disappointing employees.

If you still think an AI roadmap is the path to success, ask yourself a simple question: Would the roadmap you drafted at the end of 2024 look anything like the one you'd draft today?

Why do you expect this year to be different?

Positioning > Planning

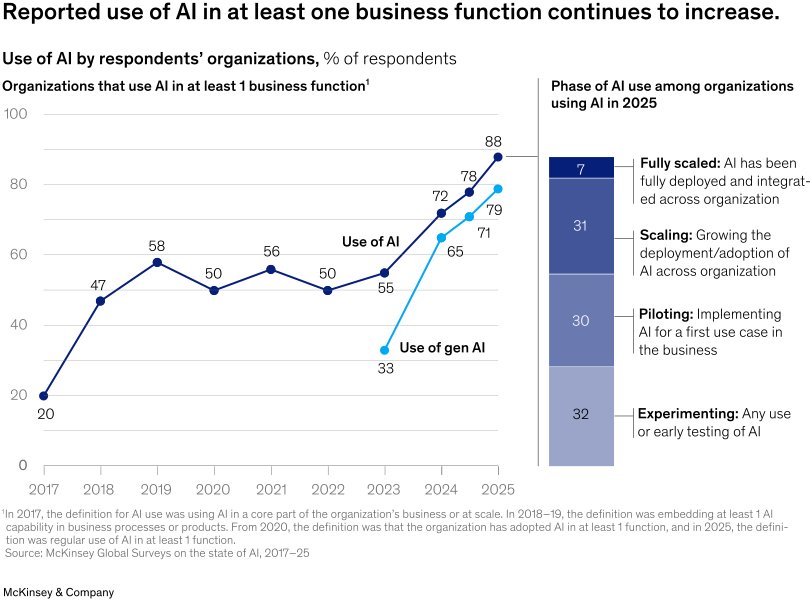

In McKinsey's annual The State of AI survey, 7% of respondents claimed AI was fully scaled across their organization. Another 31% said they were on the path to success. That's fine, but it's far too early to declare victory.

Source: McKinsey

I think about an AI strategy like catching a fly ball. The baseball just came off the bat, and you're trying to figure out where it'll land. Also, it's windy, it's raining, and the lights are in your eyes.

Should you break out your physics textbook and create a detailed plan for catching the ball? Or should you start running in the ball's general direction and course-correct as you go?

If you're building an AI roadmap, you're running around the outfield with a calculator. Even if you have perfect information — and you don't — your assumptions and calculations will be worthless in a few months.

AI strategy is about positioning, not planning. It's about making your best guess about where the ball is headed and moving in that direction. Every assumption you bake into a roadmap makes it tougher to adjust as new information arrives.

AI coding agents were just the beginning. Here are a few examples of disruptive shifts that could happen over the next two years:

New devices: We still haven't found the best form factor for AI. Maybe it's a pin. Maybe it's a smart speaker. Maybe it's glasses. The one thing that seems clear is that it's not the smartphone or laptop.

Enterprise software: Traditional SaaS companies continue enjoying fat margins even as the cost of writing code approaches zero. Mass-market SaaS should eventually splinter, just as broadcast media did when social media burst onto the scene.

Model architectures: AI labs are hard at work solving big problems like continuous learning and world models. Think about how quaint GPT-3 seems today. Reasoning models were the most recent advance, but they won't be the last.

A breakthrough in any one of these areas would fundamentally alter the trajectory of AI. In this environment, mitigating risk doesn't come from tightening control. It comes from building organizations that can change direction without fracturing.

So be honest. Is your roadmap actually positioning you for the future, or is it just a slide you toss into the board deck so people stop asking difficult questions?

Catching the Ball

So far, I've only told you what not to do. My rant isn't of much use without an alternative. What should you do in 2026?

Successfully positioning your organization for a range of possible futures requires three things: (1) weaving AI into the fabric of what you do, (2) removing sources of friction, and (3) making a handful of big bets.

Start by ditching the concept of AI use cases. Your team is already juggling enough priorities, initiatives, metrics, and targets. Push them to use AI to do what they're already doing, only better, faster, and cheaper.

Here are a few examples:

Stretch targets: Set ambitious targets for your core metrics (e.g., first-call resolution, sales conversion, product availability) that go beyond what's in the budget. Make these stretch targets high enough that teams can only hit them with AI's help. Don't penalize teams for missing their stretch targets, but recognize and reward those that hit them.

Accelerated timelines: Revisit initiatives planned in the pre-AI era. That multi-year IT implementation shouldn't take 18 months. Push the team to complete it within six months, using AI for tasks like documentation, customization, migration, and testing. You might break something along the way, but the time you save gives you a margin of safety.

Low-cost expertise: Do you have any consulting projects slated for this year? Is there any harm in letting AI take a first pass? Even if you don't love the output, you'll have a baseline for comparison. You might even be surprised how often the AI answer is "good enough."

Use cases are direct investments in technology. The above examples are indirect investments in teams, capabilities, and innovation. The approach is messy, but so is the state of AI right now.

Not every team will succeed. In fact, most will struggle. But that struggle is the point. Building new capabilities is frustrating, and learning comes as much from failure as from success. Encourage teams to experiment now while the cost of failure is low; it won't be that way forever.

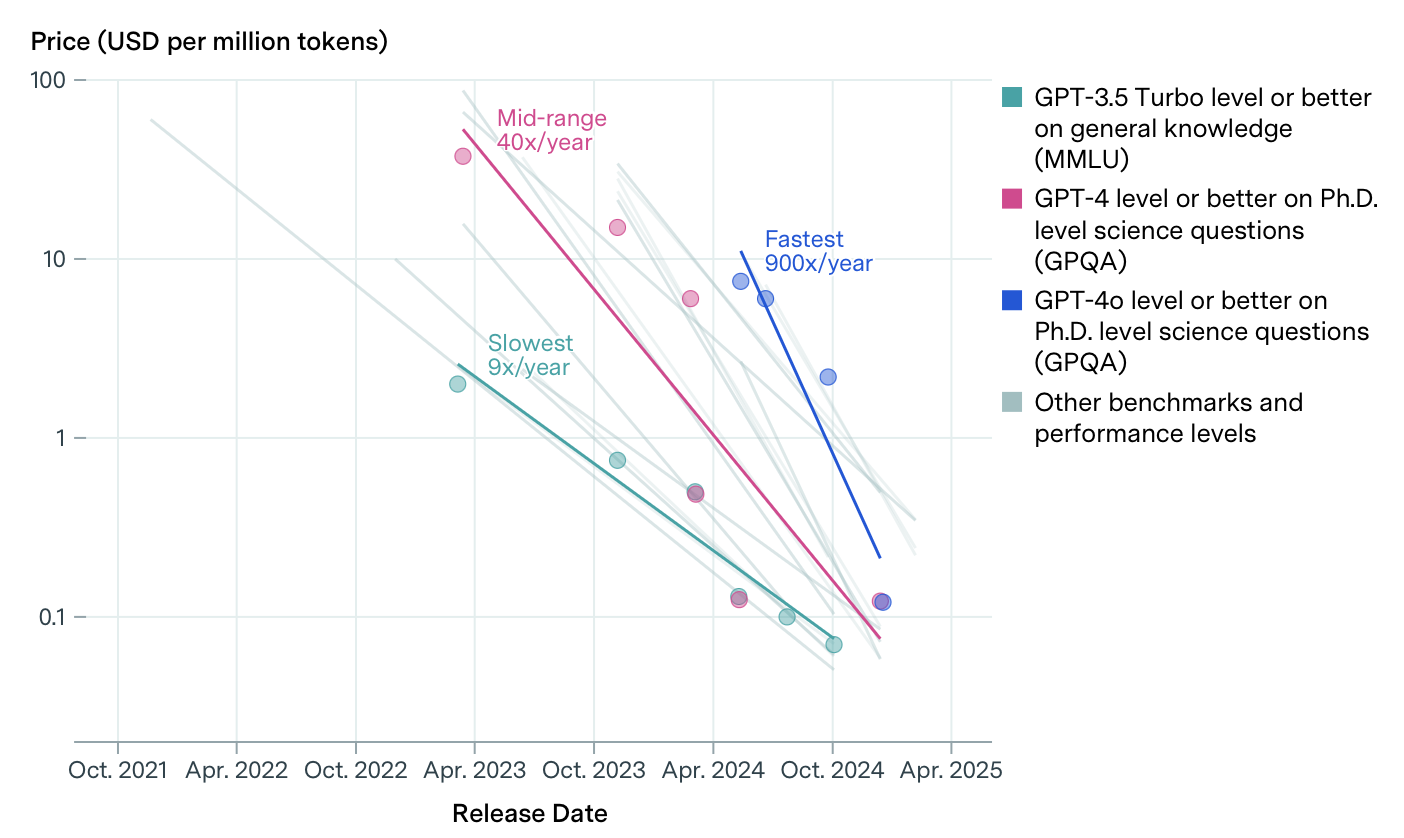

Next, aggressively remove barriers to AI adoption. One of the easiest things you can do is make sure people have access to the best models. The gap between frontier models (e.g., GPT-5.2, Claude Opus 4.5, Gemini 3) and cheap models isn't worth the tradeoffs. Plus, the AI cost curve only bends in one direction: down.

Source: Epoch AI

Don't pinch pennies. Give your teams access to the best general-purpose models and platforms you can find. If you're getting AI for free through existing software providers, you're getting what you're paying for.

Here are a few more examples of common AI friction points:

Salary bands: Can an employee managing five AI agents make the same money as one managing five employees? Make sure employees have an incentive to manage AI agents.

Walled gardens: IT teams have development and test environments for a reason. You don't want people experimenting with AI in production systems, but have you given them an alternative?

Technical support: Who does an employee call if they want to know which OpenAI API key to use for an agent they're testing? Helpdesks should do more than reset passwords and provision laptops.

When writing code was the bottleneck, it made sense for every request to flow through IT. Teams could do very little on their own, and the risk of breaking something outweighed the upside. That's changing, and now is the time to get ahead of the curve.

Finally, make a couple of big bets. These aren't incremental use cases like what you'll find on an AI roadmap. They're far more ambitious.

What's the core IT system that runs your operations? Start building an AI-powered replacement.

What's a new product or service that could double your revenue by 2030? Start building it with AI.

How would an AI-native competitor disrupt your business? Start building that company before they do.

Imagine it's 2030. That trendline on the METR chart kept going up, and not just for software engineering. Are you excited or terrified?

If you've embedded AI into your business, made it easy for employees to adopt it, and executed on a few big bets, you're likely ahead of the curve. If you dutifully followed your AI roadmap, you've probably built a chatbot, an RFP analyzer, and an agentic pricing tool.

Roadmaps are how you wind up stuck in 2025.

The instinct to build AI roadmaps is understandable. Boards need to hold management accountable, and executives need to manage risk. Roadmaps are the natural consequence of those pressures.

The problem is timing. In a period when AI is advancing faster than any planning cycle can keep up, roadmaps offer the appearance of control and predictability without delivering either. They may calm stakeholders, but they also lock in assumptions that will age poorly.

The good news is that there's plenty of value on the table. You don't need to get everything right to hit your impact commitments. Rising AI capabilities and falling costs mean today's business cases should look conservative by summer, assuming you can adapt and ride the AI tailwinds.

When the dust settles, capabilities flatline, and ecosystems mature, every company should build an AI roadmap. Point B will finally come into focus, and strategy will give way to execution.

That time isn't now. Stop planning. Start positioning.